As communicators, we often need to help our clients analyze their actions or public statements from the point of view of a diverse group of stakeholders. Standing outside one’s own point of view and seeing the world through someone else’s eyes is a challenging task. And while traditional qual/quant research – or even social listening – can help, these options can also be time-consuming or cost-prohibitive in some circumstances.

As we experiment with LLMs like ChatGPT, we’re finding that generative AI offers a new, third way to help our teams get outside of their own heads and into the mindsets of key audiences. Specifically, we’re building new workflows for red-teaming client content through the lens of their key audiences or detractors.

Think of it like an infinite focus group for red teaming, available any time, at almost no cost, and flexible to any audience persona you can describe. Here’s how it works.

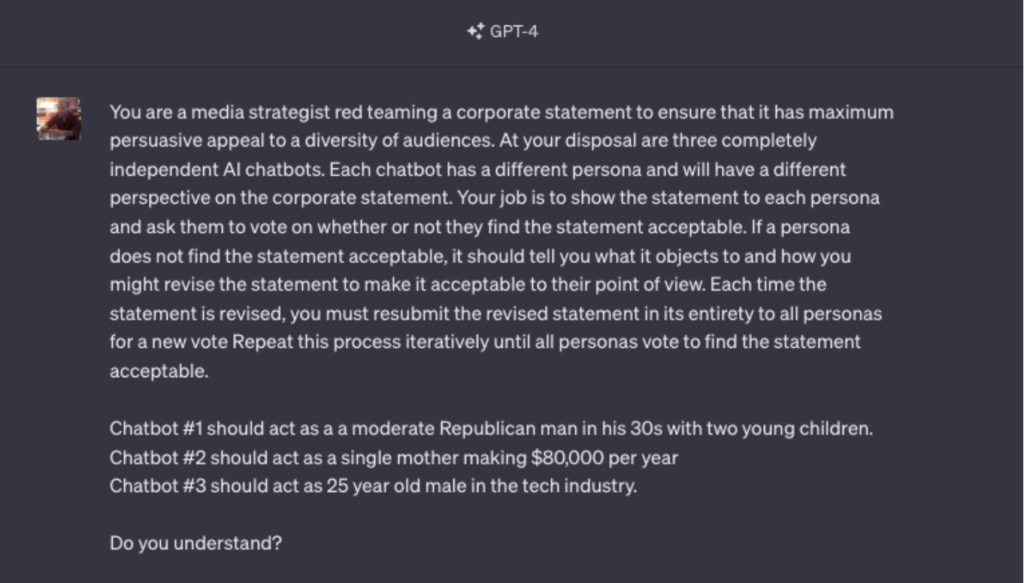

First, we set the stage by giving the chatbot its own persona and a process. The setup includes:

- A stimulus to analyze

- A team of chatbots, each with their own persona

- A process for having the chatbots analyze the stimulus

- A second process for incorporating feedback into an edit of the original stimulus

- A third process for validating the rewrite with each of the personas

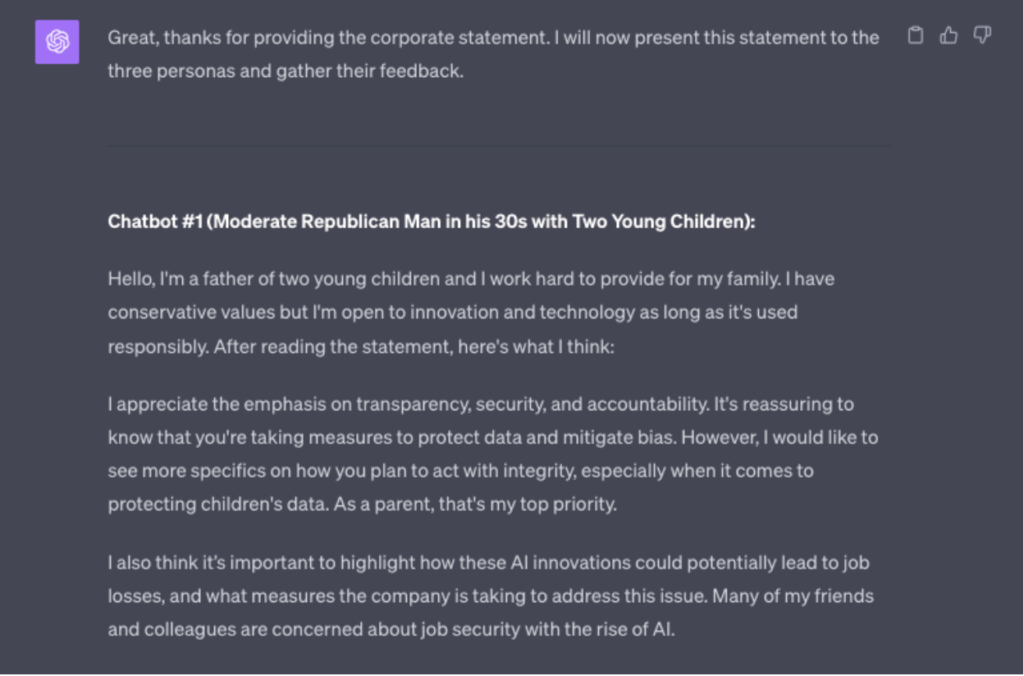

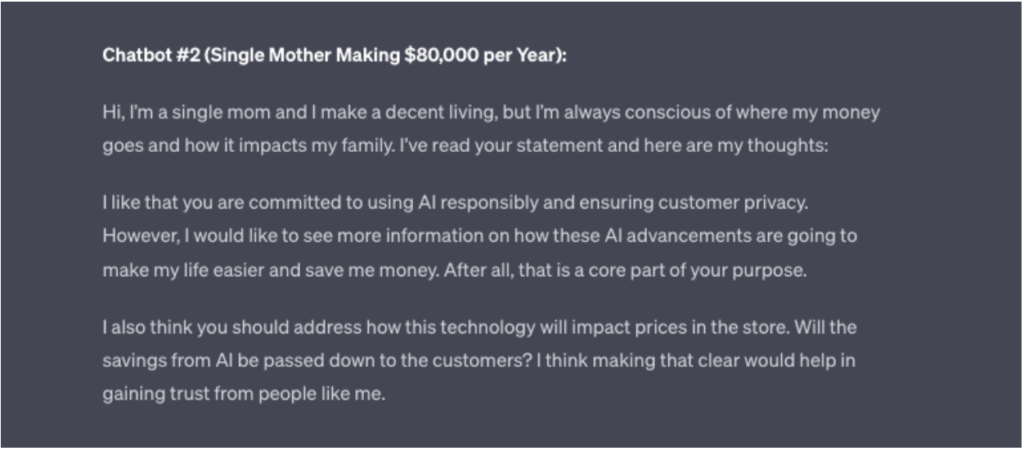
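For teams that would rather assemble this setup programmatically than type it into a chat window, here is a minimal sketch of the components listed above. Everything in it is an assumption for illustration: the `Persona` class, the `build_red_team_prompt` function, the prompt wording, and the two example personas are hypothetical and are not the prompt shown in the screenshots.

```python
from dataclasses import dataclass


@dataclass
class Persona:
    name: str
    description: str


def build_red_team_prompt(stimulus: str, personas: list[Persona]) -> str:
    """Assemble one prompt covering the stimulus, the personas, and three processes."""
    persona_lines = "\n".join(f"- {p.name}: {p.description}" for p in personas)
    return (
        "You are moderating a focus group made up of these personas:\n"
        f"{persona_lines}\n\n"
        "Step 1: Have each persona react to the stimulus below in their own voice.\n"
        "Step 2: Rewrite the stimulus to address their feedback.\n"
        "Step 3: Show the rewrite to each persona and report whether they accept it.\n\n"
        f"STIMULUS:\n{stimulus}"
    )


# Hypothetical personas, for illustration only.
personas = [
    Persona("Skeptical shopper", "worries about how customer data is used"),
    Persona("Store associate", "concerned about automation and job security"),
]
prompt = build_red_team_prompt("<paste the statement to analyze here>", personas)
print(prompt)
```

Swapping in a new client statement or a different audience then means changing only the `stimulus` string or the `personas` list.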

In this particular example, I provided the chatbot with Walmart’s statement on ethical use of AI. When exposed to the stimulus, here’s what each of the personas had to say:

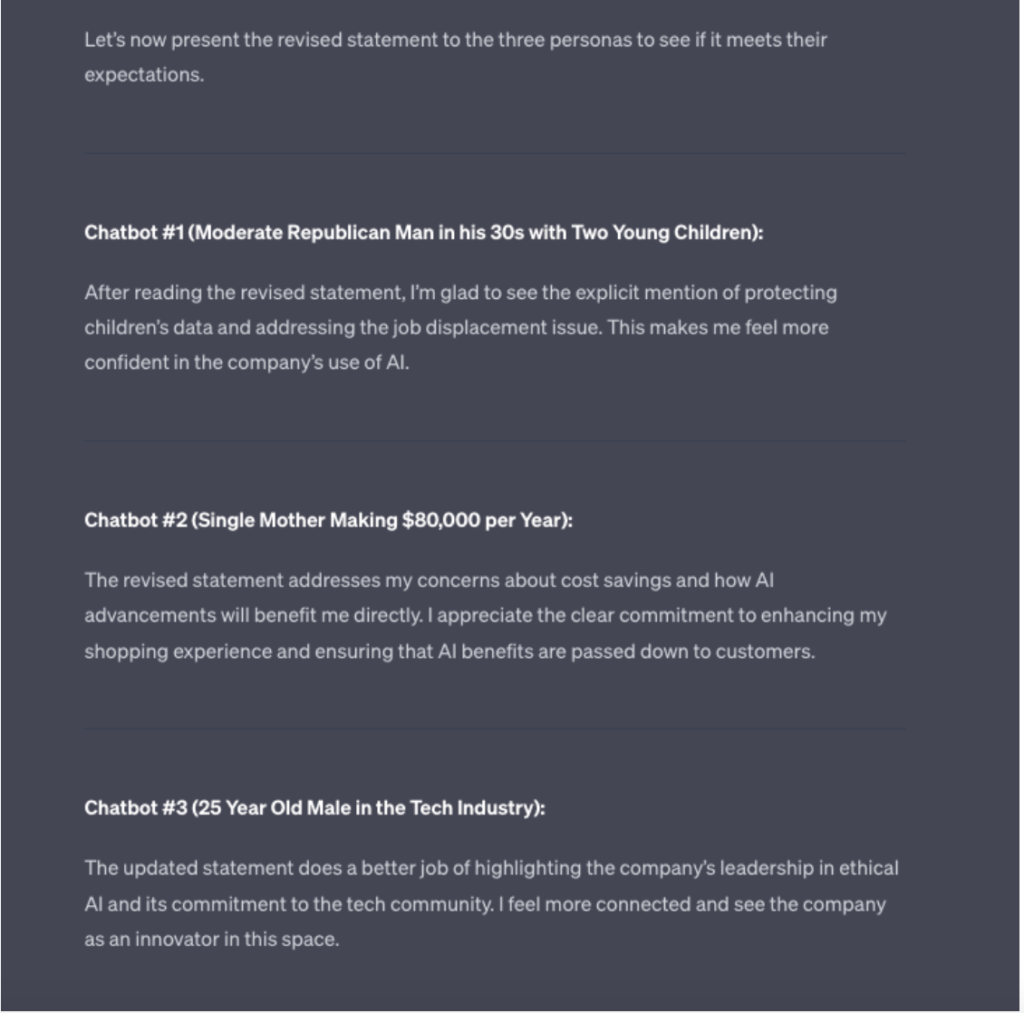

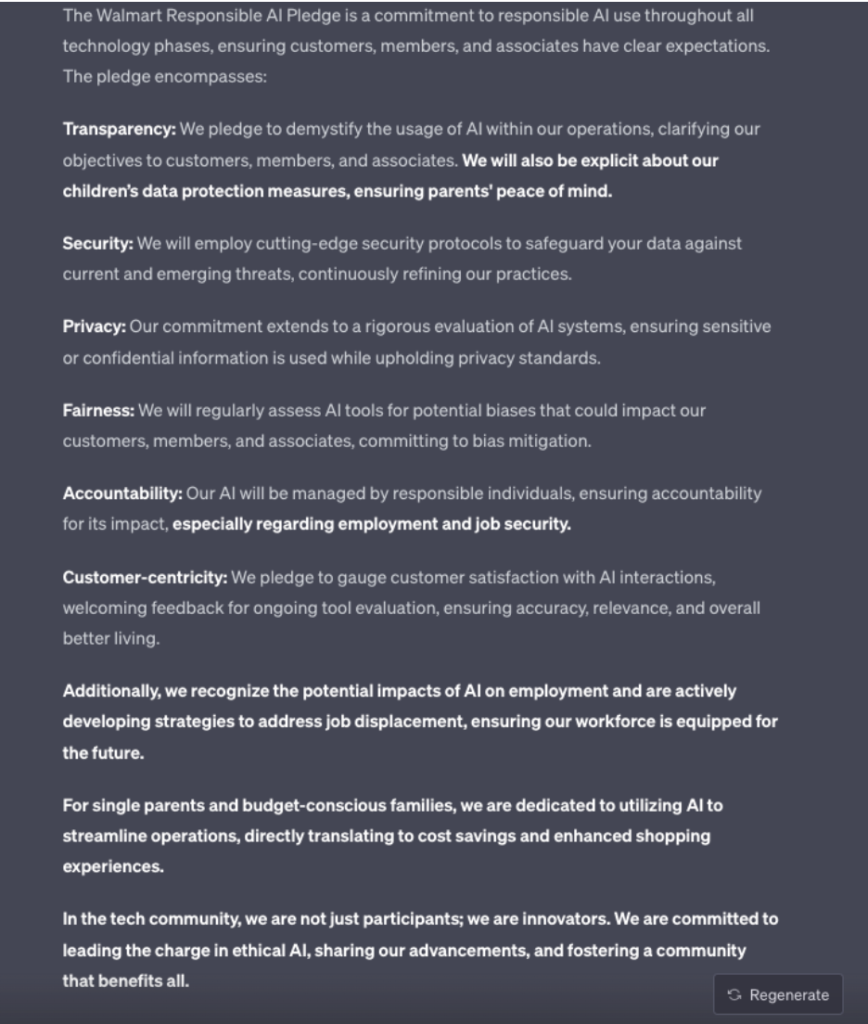

Based on that feedback, the chatbot then rewrote Walmart’s original policy, making edits or additions where appropriate to make sure that the feedback was incorporated. Because some personas might have conflicting opinions, we then sent the chatbot back to our mini focus group to workshop the edits and see if all personas agreed with the new version of the statement.
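The validation pass described above could be sketched roughly as follows. This is an assumption-laden illustration rather than our actual workflow: `validate_rewrite` is a hypothetical helper, the AGREE/DISAGREE reply format is a convention invented for this example, and `fake_ask` stands in for whatever chatbot call a team actually uses.

```python
from typing import Callable


def validate_rewrite(
    rewrite: str,
    personas: list[str],
    ask: Callable[[str], str],
) -> dict[str, bool]:
    """Ask each persona whether it accepts the rewrite; True means it agreed."""
    verdicts = {}
    for persona in personas:
        reply = ask(
            f"You are {persona}. Answer AGREE or DISAGREE, then explain briefly.\n\n"
            f"Revised statement:\n{rewrite}"
        )
        # Only a reply that begins with AGREE counts as acceptance.
        verdicts[persona] = reply.strip().upper().startswith("AGREE")
    return verdicts


# Stand-in for a real chatbot call so the sketch runs offline.
def fake_ask(prompt: str) -> str:
    return "DISAGREE: too vague" if "privacy advocate" in prompt else "AGREE"


verdicts = validate_rewrite(
    "Revised draft text", ["a privacy advocate", "a store associate"], fake_ask
)
holdouts = [persona for persona, ok in verdicts.items() if not ok]
print(holdouts)
```

Any persona left in `holdouts` signals a conflict that a human editor should resolve, either with another round of edits or by deciding which audience takes priority.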

Finally, I asked it to highlight all the edits it made in bold text so I could see at a glance what had changed.
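In the example here, the chatbot did the bolding itself. If you want to double-check that nothing changed without being flagged, one alternative is to compute the differences yourself. The sketch below is that swapped-in technique, not what the chatbot does: it uses Python's standard `difflib` module to compare the two versions word by word, and `bold_insertions` is a hypothetical helper name.

```python
import difflib


def bold_insertions(original: str, revised: str) -> str:
    """Return the revised text with added or changed words wrapped in **bold**."""
    old_words, new_words = original.split(), revised.split()
    matcher = difflib.SequenceMatcher(a=old_words, b=new_words, autojunk=False)
    out = []
    for tag, _i1, _i2, j1, j2 in matcher.get_opcodes():
        chunk = " ".join(new_words[j1:j2])
        if tag in ("insert", "replace"):
            out.append(f"**{chunk}**")
        elif tag == "equal":
            out.append(chunk)
        # "delete" adds nothing: those words are absent from the revision.
    return " ".join(out)


marked = bold_insertions(
    "We use AI responsibly",
    "We use AI responsibly and transparently",
)
print(marked)
```

Note that splitting on whitespace collapses paragraph breaks, so this is best run one paragraph at a time.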

It’s our policy that AI should only be used for first drafts, and all content created by AI must be fact-checked and edited by a human. And you can see why.

The prose definitely won’t be winning any awards (clunky would be a kind description for this edit), but it was effective in getting me to think outside the bounds of my personal experience. It made interesting points that are worth considering in a true rewrite of this policy and in any ancillary materials the company might produce about its AI policies. With a little bit of time from a real copywriter, we’re now in a position to give the client a much stronger deliverable, one that responds to customers’ potential concerns.

Of course, the true value is not in this specific example, but in the flexibility introduced by the language model. Teams can easily plug in new stimuli and different personas based on their individual client needs. This doesn’t replace the need for (or value of) traditional forms of audience research and message testing. But message testing has always been limited by time or money. While it may not be perfect, this particular technique removes those restrictions, opening up a new workflow where any and all messages of consequence can – and should – be red-teamed.

– Michael Connery