In 2016, Joy Buolamwini, founder of the Algorithmic Justice League, demonstrated racial bias in facial recognition systems by simply holding a webcam to her face. The machine learning model used to identify users was trained on a generic dataset with a limited range of skin tones and facial features. The MIT research assistant and data activist was rendered invisible.

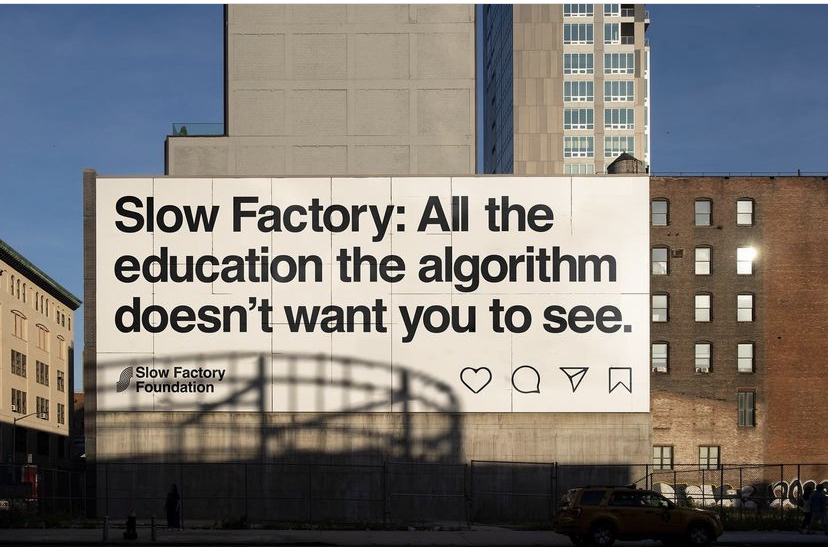

Within the last month, many activists, educators, and celebrities who use their social platforms to bring awareness to ongoing global crises turned to their communities to ask the question: “can you see me?” As conversations around AI and platform moderation intensified, users began to voice concerns over being unfairly flagged for violating community guidelines and to note substantial drops in engagement and impressions. Others found their posts deleted altogether and believe they are being shadow-banned. However, what is perhaps most striking about these conversations is how steeped they are in speculation. How does it all work?

The short answer is, we don’t fully know… yet. In the meantime, the algorithm has become synonymous with the bad guy and, dare I say, a scapegoat. As platform users look for someone or something to hold accountable, superficial narratives surrounding the ominous algorithm swirl and spiral into conspiracy theories of their own. However, it’s critical to look further. While it’s correct to identify an algorithm as the cause, the root of the issue lies in the data it’s built on.

For AI to work, it requires a massive dataset. For AI to work well, and to minimize bias, it requires a massive, diverse, AND inclusive dataset. So in the case of Buolamwini’s experience with facial recognition, the machine learning model must be trained on as wide a range of quantifiable, physical, human facial characteristics as possible. From our eye color to the distance between our eyes, the shape of our chins, our skin tones and beyond, these features are all converted into mathematical representations to enable recognition. The same goes for natural language on social media platforms. If communities are moderated using generic language models that do not account for nuance and diversity, voices that deviate from the ‘norm’ in any way will inadvertently be perceived as a threat and ultimately removed.
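To make “mathematical representations” a little more concrete, here is a toy sketch in Python. It is not how any real facial recognition product works: the three measurements, the `recognize` function, and the threshold are all hypothetical, and matching against a handful of stored faces stands in for training on a dataset. Real systems learn hundreds of features from images. The point is only that a face becomes a list of numbers, and a face that sits far from everything the system has seen goes unrecognized.

```python
import math

# Hypothetical, hand-picked measurements for illustration only.
def to_vector(eye_distance_cm, chin_width_cm, skin_tone_index):
    """Turn a few facial measurements into a point in feature space."""
    return (eye_distance_cm, chin_width_cm, skin_tone_index)

def recognize(probe, known_faces, threshold=1.0):
    """Return the name of the closest known face, or None if no known
    face lies within the (assumed) distance threshold."""
    best_name, best_distance = None, float("inf")
    for name, vector in known_faces.items():
        d = math.dist(probe, vector)  # Euclidean distance between vectors
        if d < best_distance:
            best_name, best_distance = name, d
    return best_name if best_distance <= threshold else None

# A narrow set of known faces: both sit in a small corner of feature space.
known_faces = {
    "user_a": to_vector(6.2, 11.0, 2.0),
    "user_b": to_vector(6.5, 12.0, 3.0),
}

similar_face = to_vector(6.3, 11.1, 2.1)   # close to what the system has seen
distant_face = to_vector(6.0, 11.5, 9.0)   # far from anything it has seen
```

In this sketch, `recognize(similar_face, known_faces)` finds a match, while `recognize(distant_face, known_faces)` returns `None`. The second face is not wrong in any way; it is simply outside the narrow range of examples the system was given.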

Automated content management systems like the ones used to flag users and their content are prone to error. But as we look for solutions, is it enough to migrate to other platforms or build our own when the technical infrastructure is the same and our tools are so interconnected? Marrying intent with technology begins with transparency and clarity.

While mega-platforms such as Instagram and Twitter have acknowledged blind spots in their software, they have yet to provide a tangible account of how users are moderated. On the other hand, in an unprecedented move, PlayStation recently published a patent outlining its protocol for banning users who behave inappropriately based on a range of inputs including voice, gaze, and gesture. Although the patent does not outline the specific actions that violate the code of conduct or the weights assigned to them, it’s a step in the right direction, providing clarity on how Sony is holding members of its gaming community accountable.

As Buolamwini puts it in her TED Talk, “giving machines sight” warrants collective civic engagement. Advancing truly inclusive AI is a joint effort. We can no longer afford to discuss, understand, and challenge how tech works in loose or blanket terms, since doing so risks further absolving ourselves of any real responsibility. Nor can we understand how the tech works on our own.

In Weapons of Math Destruction, Cathy O’Neil outlines three characteristics of problematic algorithms. They’re opaque, unregulated, and difficult to contest. And we’re witnessing their implications daily on our timelines and in our news cycles. To begin to correct course, platforms need to be transparent, not just about the algorithms but about the datasets that fuel them. With that transparency, we can hold them accountable to improve their platforms and deliver more equitable experiences across the web and, inevitably, IRL.
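What could that accountability look like in practice? One simple check, sketched below in Python, is to compare how often content from different communities gets flagged. This is a minimal illustration under my own assumptions, not any platform’s actual audit: the `flag_rate_by_group` function and the sample records are hypothetical, and a real audit would need the platform’s moderation data, which is exactly what is not public today.

```python
from collections import defaultdict

def flag_rate_by_group(records):
    """Given (group, was_flagged) pairs, return the share of posts
    flagged for each group."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}

# Made-up sample data purely to show the shape of the check.
sample_records = [
    ("community_a", True),
    ("community_a", False),
    ("community_a", False),
    ("community_a", False),
    ("community_b", True),
    ("community_b", True),
    ("community_b", False),
    ("community_b", False),
]

rates = flag_rate_by_group(sample_records)
```

A large gap between groups does not prove bias on its own, but it is the kind of question that can only be asked, and contested, once the underlying data is visible.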

Historically, we’ve found humor in not fully understanding the underpinnings of our tech. And we’ve found comfort in collectively relinquishing control to the experts, so much so that we’ve made celebrities of Musk, Zuckerberg and Dorsey. Deferring to tech leaders is a luxury we never could and never will be able to afford. Algorithmic bias travels quickly and exacerbates inequalities in all corners of life. It’s time we embrace the technocrat in all of us, stop making excuses on behalf of technology and instead actively engage in improving it. We’re up against the clock.

Marcha Johnson